I read this tweet from Andrew Wilkinson the other day:

A great thought experiment. Let’s pose it another way.

It’s 1996 and you own a newspaper business. What do you do to stave off total collapse over the next 20 years?

Off the top of my head:

Expand onto the internet and build a subscription model with both online and offline subscription tiers.

Build a community of readers by prioritizing local news.

Onboard your team on using the internet to their advantage (research, reaching out to people on forums, etc.).

TBH, this is all pretty easy to figure out in hindsight. If you had known in 1996 how big the internet was going to be, of course you would have done these things.

But the real value of this thought experiment is applying these lessons to AI. Each initiative maps to a classic lesson in business defensibility:

Expanding onto the internet → adopting the new technology as the platform of the future.

Building a community → owning a moat through brand and customer trust.

Onboarding your team → using AI to amplify human capital and efficiency.

But out of all these options, it’s easy to forget probably the smartest one of all → sell your newspaper business and build something on the internet.

What’s the Point in Following AI?

This brings me to my second point (and the goal of this newsletter): how do you build a business in AI right now?

The biggest problem with generative AI so far is how much of the value accrues to OpenAI and incumbent companies rather than to new startups.

For example, am I really going to use Lex, an AI writing tool, once Notion AI comes out and all of my existing notes are already in Notion? Of course not! I’d have to switch to a new writing tool that lacks all the existing SaaS features Notion has.

You could argue that AI research is fun: it’s cool to learn new things, and the field is interesting to follow. But why build an AI product if it gets destroyed as soon as GPT-4 comes out and blows all your prompt work out of the water?

So I keep coming back to the thought experiment: why was it important to study the development of the internet in 1996, when it was new? One underlying advantage was simply understanding how to hedge your risk.

The AI Development Curve

I used to believe that forecasting AI development could give a business an edge. For example, if I could predict what GPT-4 would be able to do, I could research all the opportunities unlocked between GPT-3 and GPT-4. Say GPT-4 can talk to humans and pass the Turing test 99% of the time, while GPT-3 passes it 89% of the time. That margin (failing 1 conversation in 100 instead of 11) is the difference between an okay customer support chatbot and an amazing one.

But truthfully, forecasting AI development is more useful as a risk-mitigation exercise, especially if you yourself are running a customer support agency!

In some ways, it’s a process of elimination for what you can and should invest in if you’re starting new businesses and looking for new markets. If the thesis is that AI will take over the world, then you want to be the last thing it takes over.

For example → take a marketing agency. In some sense, you’re just running a firm that has to teach people the newest and greatest growth hacks faster than anyone else. This won’t necessarily be automated away by AI, because you’re essentially pitting AI + humans against the tech platforms’ own AI algorithms.

Real estate is another example: a slow, bureaucratic wealth transfer built on finite resources in the physical world. Everyone needs a place to lay their head, and regulation and NIMBYs (people who oppose new housing near them) will forever prevent real estate from becoming abundant. There isn’t much AI can do about this, unless the people who want to build get much better at using AI to navigate the law.

So let’s say we actually want to forecast AI development. How do we measure its growth?

Well, let’s look at the internet in 1996 again. Most trends can go one of two ways → one is that they systemically change how the entire world works as more and more attention flows to the technology.

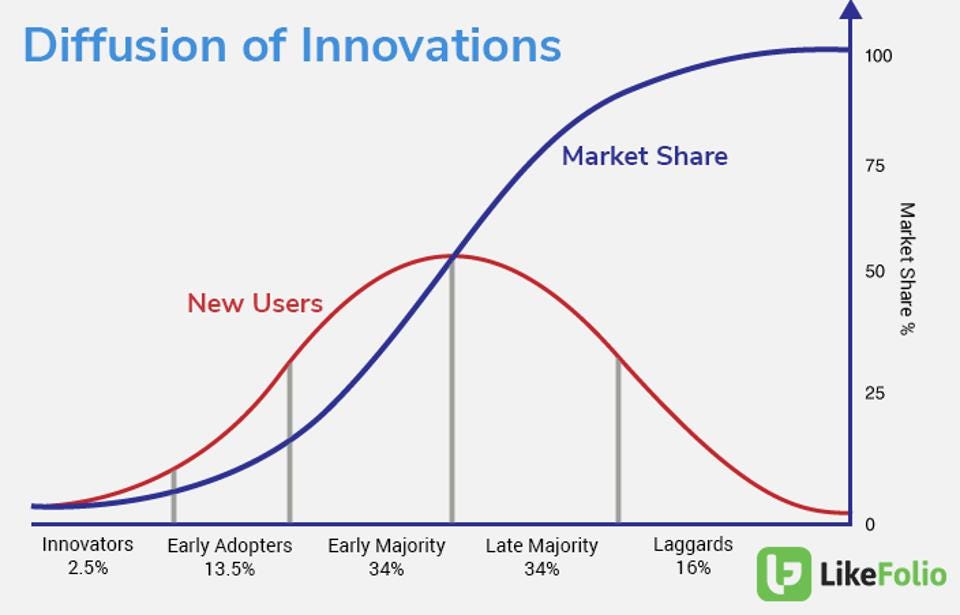

The rate of adoption of new technologies also follows a similar pattern. For example → when did Google become a necessity? When did losing your smartphone become like losing a limb?

Last month, on a 10+ hour flight to Argentina, I realized how much of a necessity Wi-Fi had become. Will I feel that way about AI in 10 years? Will I feel like I’ve lost a limb when I’m flying through space and the GPU running my AI sidekick has to be checked as baggage?

Naturally, it’s very hard to imagine.

Conclusion

I think everyone agrees that the risk AI poses to existing businesses is rather existential. Recent developments have pushed the adoption curve up with just one or two big new technologies (LLMs, image generation, etc.).

In the near term, forecasting AI development is better for managing risk than for creating new value. I’ll keep thinking about which businesses exist today that won’t be around in a few years, and which opportunities exist today that will still be around in a few years.