Three Years of AI Hype

A post-mortem of what I got right and what I got wrong

About three years ago I wrote about this small growing trend called artificial intelligence. Jokes aside, in hindsight, I am happy to have been relatively early to this seismic technological revolution*[1].

The launch of ChatGPT however just a few months after my posts on AI business defensibility and growth immediately made parts of my predictions hilariously irrelevant overnight.

But in the last three years we’ve seen insane AI development. LLMs got much smarter with advanced reasoning and multi-modal capabilities. While on the application layer we got vibe coding and an explosion of AI startups raising billions of dollars.

Three years later, I wanted to revisit what I got right and wrong, see what it reveals about AI’s potential trajectory from today, and make some new predictions to test against the future.

Distribution Trumped Everything

In my October 2022 post AI Business Defensibility, I was skeptical that companies building user interfaces on top of GPT-3 could survive. My reasoning was simple: when spinning up a new feature is as easy as writing a text prompt to copy your competitors, you have no competitive moat. I quoted Allen Cheng’s framework:

“If it’s easy to make a good-enough app out of the box, the barriers to entry are mercilessly low. Dozens of competitors for your idea will spring up literally overnight, as they already have in these Twitter demos... If GPT-3 is so easy to adopt and build products with, incumbents will do it too.”

And specifically in the best AI startup ideas won’t be selling AI, I chronicled the rise of Jasper.ai, an AI copywriting business, as the biggest AI success story in 2022 when they raised at a $1.5 billion dollar valuation. My prediction was that the the success of their execution would depend on leveraging AI as a growth vector.

And just two months later, ChatGPT launched and obliterated my thesis overnight.

It was immediately clear that Jasper.ai’s main selling point was now just one feature buried inside of ChatGPT’s endless use cases. For $20/month, consumers got an all-in-one productivity app that did everything Jasper did and more. And within a year, Jasper’s CEO stepped down and they pivoted to enterprise.

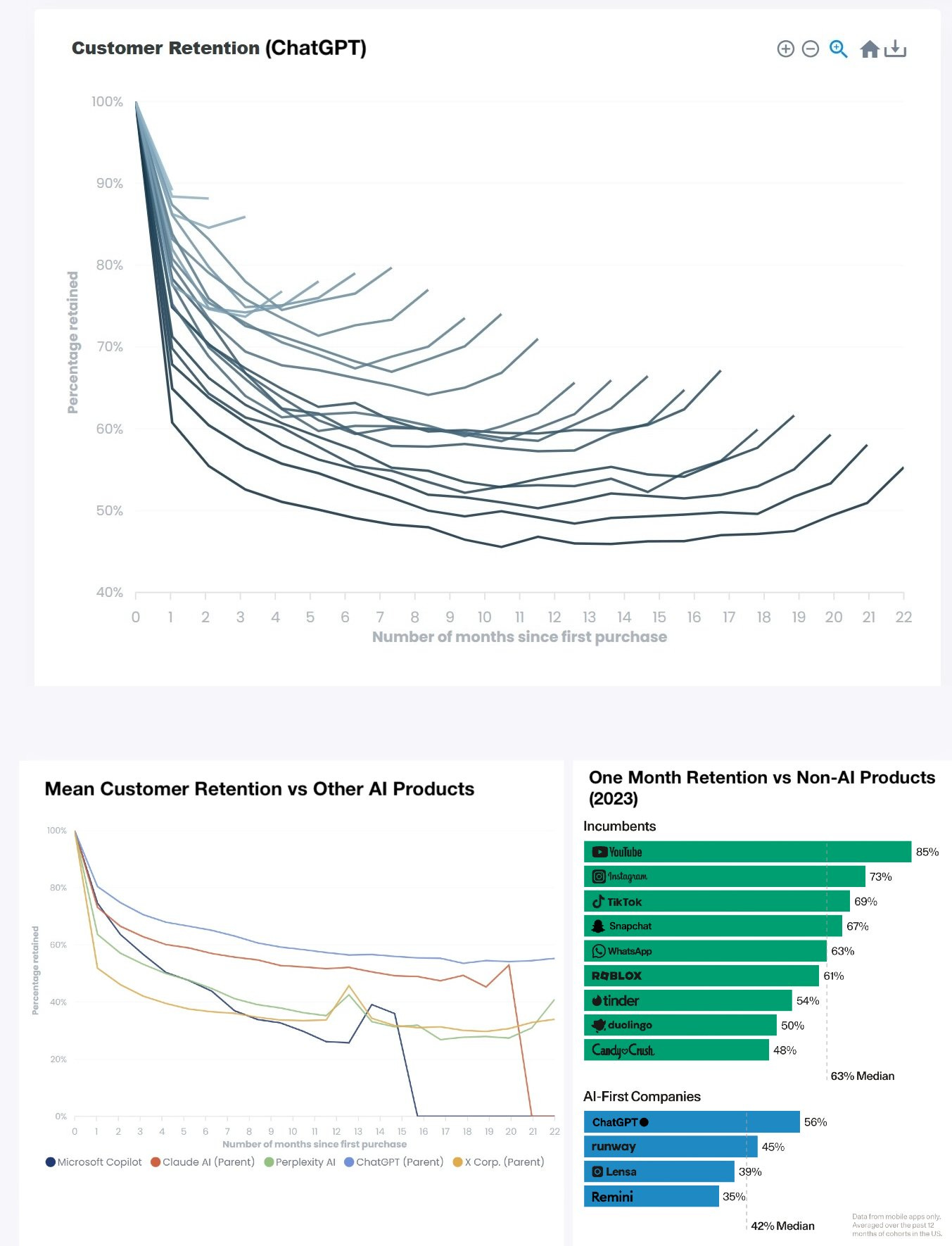

But Jasper wasn’t alone. In my analysis pre-ChatGPT, I assumed OpenAI would remain an enterprise B2B company, exposing APIs while startups built consumer applications on top. What no one saw coming was OpenAI weaponizing ChatGPT’s accidental virality into a dominant consumer business. The economic surplus in AI went almost entirely to OpenAI and end consumers, not startups.

The pattern became predictable: a startup would go viral with a new AI capability, then OpenAI would integrate it into ChatGPT. Copywriting tools like Jasper and Copy.ai were obliterated overnight. Image generation like Lensa’s avatar tool became a DALL-E integration. Search wrappers like Perplexity became ChatGPT with web search. OpenAI cherry-picked whatever maximized user acquisition for their everything-app strategy.

But even then, I underestimated how much money these apps made before getting bulldozed. Lensa for example generated $100 million from AI avatars at peak hype. Cal.AI hit $10+ million from calorie counting with ChatGPT’s image to text functionality. These startups capitalized on step-changes in AI capabilities, went viral, and extracted value in a compressed window before OpenAI commoditized them or copycats saturated the market.

The exception was vertical AI products that built defensibility beyond just the model. While they rode early distribution from LLM improvements, survival required actual lock-in through regulation, workflow integration, or switching costs.

The Middle Layer Would Be Defensible

In AI Business Defensibility, I believed the real opportunity was in what Sam Altman called the “middle layer”, startups that would take large models and build something defensible on top through proprietary data and fine-tuning. From Sam Altman himself:

“I think there’ll be a whole new set of startups that take an existing very large model of the future and tune it... those companies will create a lot of enduring value because they will have a special version of it. They won’t have to have created the base model, but they will have created something they can use just for themselves or share with others that has this unique data flywheel going that improves over time.”

My theory was straightforward: companies that could generate proprietary training data through user feedback would build defensible moats. The assumption was that these businesses would create better fine-tuned models through harvesting their own data flywheels.

The middle layer has since worked with companies like Cursor (coding), Harvey (legal AI), and Samaya (finance AI) raising hundreds of millions of dollars and using that money to go after specific enterprise companies in those verticals. But what I got wrong was that they didn’t win through better fine-tuned models: instead it came from specific workflow integration within those vertical industries.

Healthcare has HIPAA, finance has data isolation requirements, legal has attorney-client privilege. Once you’re integrated into a law firm’s case management system or a hospital’s EMR, you’re incredibly hard to rip out. The switching cost isn’t model quality: it’s re-training staff, rebuilding integrations, and risking operational disruption.

Additionally trust and liability is one where ChatGPT’s consumer reputation and distribution has made it harder for OpenAI to actually pursue their enterprise strategy. When you work in an industry that needs high precision and almost zero false positives or negatives, you go to companies that are essentially selling insurance and accountability wrapped in an AI interface.

The contrast with Jasper is instructive though. When ChatGPT came out and was “good enough” at copywriting for $20/month, Jasper had nothing to fall back on. Harvey, by contrast, picked a domain where “good enough” wasn’t good enough. Legal work requires accuracy, audit trails, and liability protection. Even if ChatGPT’s underlying model is comparable to Harvey’s, law firms can’t just use ChatGPT directly.

While I’m sure Harvey has a pitch that it will eventually generate a better model through continued usage, “data moat” turned out to be about workflow integration and accuracy over edge cases. Cohere learned this the hard way as they pitched themselves as an enterprise LLM but got fractured by vertical-specific startups that owned the compliance and integration layers.

The main contrast to the regulatory vertical argument was in coding agents like Cursor. Cursor proved you didn’t need regulation to build a moat. They bet LLMs would keep improving and went model-agnostic, focusing instead on building the best coding interface. Their advantage comes from scale as the more engineers use Cursor, the more data for optimizing prompts and context to improve the experience.

But I thought it would be a technical moat built on better fine-tuned models. Instead, it turned out to be an operational moat, either through regulatory capture and workflow lock-in (Harvey, Samaya) or through distribution and superior user experience (Cursor). In both cases, execution mattered more than model quality.

I Had the Value Chain Completely Backwards

One bigger mistake I made was narrowly constraining my understanding of AI to the only use case that I and many other understood at the time, which was copywriting. At the end of the best AI startup ideas won’t be selling AI I made my picks and shovels analogy:

You have companies like Jasper and Copy.Ai selling shovels in the gold rush. Basically building growth and revenue facing AI tools.

You have the businesses that leverage these tools as the companies mining the gold. So this could be a marketing agency that uses these tools to execute on their clients strategies better. Or just any company that wants to grow faster.

And lastly you have creators, teachers, and agencies that could sell courses on how to hire diggers and get them the latest AI shovels to mine gold a little faster.

When ChatGPT launched, my biggest mistake was not readjusting my mental model. I didn’t broaden my view that further step changes were on the horizon. It’s now clear that the real picks and shovels was in the race to develop ever increasingly intelligent LLMs built on the equation of Human Data + GPUs = Intelligence.

At the time I had no idea how LLM models were actually trained. And given this lack of knowledge, I didn’t think to invest in a company like Nvidia early on when I wrote The New Magic of AI. I didn’t understand the rate of return or the insatiable demand for compute. I was too focused on applications to see the infrastructure play staring me in the face.

Similarly on the human data side, back in February of 2024 I noticed how Reddit was expanding it’s partnerships and becoming an ever increasing training dataset for LLMs. Reddit became valuable precisely because it’s one of the last places on the internet for harvesting high-quality human content at scale. And while I did question The external feedback loop I predicted coming from AI generated slop in SEO did end up making authentic human data sources exponentially more valuable.

Which leads me to the brutal lesson of the last three years, in how as an entrepreneur, it’s easy to see everything as an opportunity to build and explore when the best move might be to follow the money and invest. I was early into understanding that this was the next wave, but didn’t have the capacity to build nor find an idea that felt entirely non-defensible on the application layer. And so I withheld both building investment into any companies besides OpenAI and Anthropic, of which I had no access to anyways.

What does the future hold?

The money pumped into AI by November 2025 has been staggering and beyond anything I imagined. But predicting the next step change feels even harder than before as AI labs are shrouded in secrecy, competing in a trillion-dollar race to AGI.

Yet with the release of GPT-5, we seem to have hit scaling limits. Diminishing returns on human data and compute have shifted innovation from building better base models to engineering better outputs. The focus is now on the agentic layer: better data pipelines, accuracy, and context retrieval rather than just scaling parameters.

This could give application layer companies some more breathing room. For example we’re now finally seeing companies like Amazon launch Alexa+ with generative AI enhancements and Snowflake launch Intelligence with text to analysis features. But the bigger question is whether enterprises can deliver real ROI from all the AI investments they’ve made. Companies have poured billions into R&D and infrastructure without seeing returns yet. There’s a lot of “bubble money” betting those returns materialize before investors lose patience.

For workers, the outlook is a bit more ominous. As LLMs become more generalizable across coding, marketing, and operations, companies will figure out which humans they don’t need. Layoffs seem inevitable alongside up-skilling for AI engineering roles. Teams are becoming managers of agents rather than doers.

Lastly the democratization of AI has made everyone more productive. While it was nascent three years ago, it is clearly being force fed down everyone in corporate America’s throats. But it’s also made this feel less like a technological revolution and more like an intense pressure to compete in a never-ending battle. Ask YC founders about their mission all you want but this is still a gold rush. Reaching AGI might be Sam Altman’s endgame. But for the rest of us, I’m not so sure what we want.

[1] I never followed through completely on turning this newsletter into it’s namesake on data and artificial intelligence